In this exclusive interview for QUEST Magazine, we sit down with Abeba Birhane, a renowned cognitive scientist and leading voice in AI ethics. With a PhD focused on human cognition, Birhane has shifted her expertise toward auditing AI systems and datasets, uncovering biases and examining technology’s impact on society.

Her groundbreaking 2019 audit of ImageNet and 80 Million Tiny Images uncovered racist and misogynist labels, leading to MIT retracting the latter dataset and sparking global conversations about responsibility in AI development. In this discussion, Birhane delves into the persistent challenges of bias in AI, the critical role of human oversight, the need for robust global regulation, and practical steps needed to ensure ethical innovation.

QUEST: Thank you for joining us today, Abeba. Can you share a bit about your background and how you got into AI, particularly AI ethics?

Abeba Birhane: I am a cognitive scientist by training. During my PhD, I was interested in examining computational models of human cognition such as problem-solving, causal reasoning, and development. As my PhD progressed, I became more focused on critically examining existing models: asking what assumptions they embed, how accurate they are, and whose perspectives they encode. This eventually led me to my current work: evaluating AI training datasets and models with the goal of bringing more rigor, clarity, and accountability.

QUEST: Your audits uncovered shocking biases in AI datasets. Were you surprised by the extent of these issues?

Abeba Birhane: I have conducted numerous audits examining how AI training datasets encode and exacerbate racism, sexism, and discriminatory behavior. While the results were not entirely surprising—since you often suspect problems as you work through datasets—seeing the extent of the issues clearly confirmed was still unsettling.

QUEST: Can you give us insight into the datasets you examined and how you conducted this work?

Abeba Birhane: Perhaps the most well-known project I worked on was with my collaborator Vinay Prabhu in 2019, where we examined computer vision datasets, especially ImageNet and the 80 Million Tiny Images dataset. While documenting the taxonomy of ImageNet, we realized it inherited its classification system from WordNet, which is known to be problematic. We then turned to another dataset, 80 Million Tiny Images, which also uses the WordNet taxonomy. TinyImages was hosted by MIT, and we found thousands of images labeled with racist and offensive slurs. Our findings led to MIT retracting the dataset in 2020.

QUEST: How did these datasets end up with such biases? Were they manually labeled?

Abeba Birhane: No. The curation process relied on automated web scraping guided by WordNet’s taxonomy of semantic relations between words, not on manual labeling. WordNet provided lexical categories, for example nouns, organized into hierarchies. The automated system then scraped the web for images tagged with those words. The problem was the lack of human oversight: offensive or racist associations were included as well.

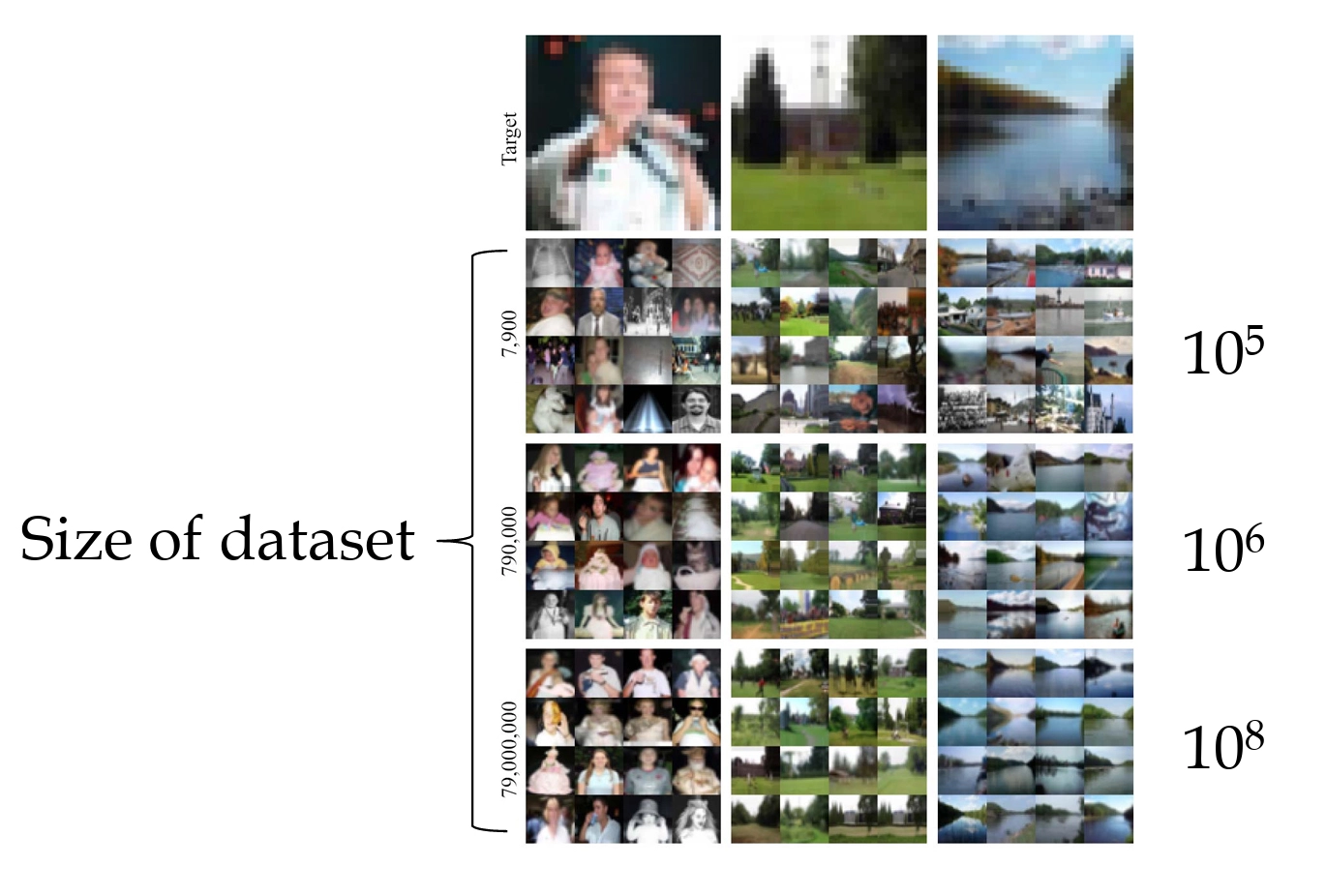

The 80 Million Tiny Images dataset, developed in 2008 by MIT, contained 80 million low-resolution images across tens of thousands of categories to train early AI vision models. Built via automated web scraping using WordNet’s taxonomy, it lacked human oversight, leading to offensive labels such as racial slurs, which were exposed by the audit Abeba Birhane conducted with Vinay Prabhu. MIT retracted the dataset in 2020, underscoring the need for corporate leaders to enforce ethical data practices.

QUEST: And how does the system link offensive words to images of certain groups?

Abeba Birhane: The dataset contained 80 million images labeled with textual descriptions. During and after scraping, nobody checked whether those labels were accurate or offensive. It was a fully automated process that attached slurs to people’s images without any safeguards.

QUEST: It sounds like human oversight is critical. Has the industry learned from this, and what other biases might companies face?

Abeba Birhane: Exactly. Yet the field of AI often follows the motto “move fast and break things.” Dataset curation has scaled up rapidly since the time of TinyImages, but many of the same problems persist. Rather than learning from past mistakes, the focus is still on building larger datasets and bigger models. Bias and racism continue to appear, not only in image datasets but also everywhere in the AI lifecycle.

Language models, for example, make stereotypical associations about professions that adhere to outdated traditional norms. For companies, this can translate into biased recommendation systems, unfair lending decisions, or discriminatory hiring practices.

QUEST: Many people worry about AI’s irreversible risks, even human extinction. What’s your take on this?

Abeba Birhane: This gives me the opportunity to clarify one of the biggest misunderstandings about AI. The idea that AI systems will become fully autonomous and cause human extinction is science fiction. AI systems are human through and through and dependent on humans for successful operation and maintenance. From training data to building neural networks to running energy-intensive data centers—these are all labor-intensive, human-driven processes. When human input stops, AI systems collapse. There is no self-sustaining AI. So fears about rogue AI taking control are unfounded. The real risks lie in how humans design, deploy, and use AI—often without adequate oversight.

QUEST: With AI’s rapid growth, how can we balance innovation with responsibility? Is regulation the answer?

Abeba Birhane: Innovation vs regulation is a false and unhelpful dichotomy. Regulation is absolutely essential for good innovation. Every major industry—food, pharmaceuticals, consumer electronics—is governed by strict regulatory guidelines. Yet AI, despite its profound societal impact, remains largely unregulated. This is a serious gap. Regulatory frameworks should not be seen as stifling innovation but as enabling safe, careful innovation. By setting clear standards for testing, accountability, and responsibility, regulation ensures AI serves society rather than harms it.

QUEST: Thank you, Abeba, for these valuable insights into navigating AI’s ethical challenges.

WordNet, developed in 1985 at Princeton, is a lexical database organizing over 155,000 English words into sets of synonyms arranged in hierarchies, used in natural language processing and computer vision.

Datasets such as ImageNet and 80 Million Tiny Images relied on WordNet, inheriting its biases. Birhane’s audits revealed racist and sexist categorizations, showing the importance of scrutinizing foundational AI tools.
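
For technically minded readers, the kind of safeguard the interview says was missing can be illustrated with a minimal Python sketch. It is not the method used in Birhane and Prabhu's audit, and it does not use real WordNet data: the toy taxonomy, the placeholder label strings, the blocklist, and the names `AuditReport` and `audit_labels` are all assumptions made up for illustration. The idea is simply that every label inherited from a taxonomy is screened before any image is collected under it, and anything suspicious goes to a human reviewer.

```python
from dataclasses import dataclass, field

# Hypothetical toy taxonomy: parent category -> child labels.
# Placeholder strings stand in for offensive terms; this is not WordNet data.
TOY_TAXONOMY = {
    "person": ["teacher", "nurse", "slur_placeholder_1"],
    "animal": ["dog", "cat"],
    "object": ["chair", "slur_placeholder_2"],
}

# Hypothetical blocklist that a human review team might maintain.
BLOCKLIST = {"slur_placeholder_1", "slur_placeholder_2"}


@dataclass
class AuditReport:
    """Labels cleared for use, and labels held back for human review."""
    approved: list = field(default_factory=list)
    flagged: list = field(default_factory=list)


def audit_labels(taxonomy, blocklist):
    """Sort every (category, label) pair into approved or flagged."""
    report = AuditReport()
    for category, labels in taxonomy.items():
        for label in labels:
            entry = (category, label)
            if label.lower() in blocklist:
                # Do not scrape images for this label; send it to a person.
                report.flagged.append(entry)
            else:
                report.approved.append(entry)
    return report


report = audit_labels(TOY_TAXONOMY, BLOCKLIST)
print(f"approved: {len(report.approved)}, flagged: {len(report.flagged)}")
for category, label in report.flagged:
    print(f"needs human review: {label!r} under {category!r}")
```

A blocklist like this is only a first pass. It catches known terms but not labels that are offensive in context, which is why the flagged list is routed to people rather than silently dropped.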